Introducing the AI Inventor: An Autonomous Discovery Engine

A Glimpse Into the AI Inventor: An Autonomous Discovery Engine

For the past several months, I have been developing a new kind of AI system. My goal was to move beyond the paradigm of AI as a simple generator of text or images, and towards an architecture that could function as a true partner in discovery—an autonomous agent capable of tackling high-level inventive challenges.

The project is called The AI Inventor, and I want to share a brief look at its architecture, its process, and its potential.

The Premise: A Journey Through a Landscape of Knowledge

The advent of Large Language Models has created an unprecedented repository of codified human knowledge. For the first time, the essential patterns of science, engineering, and commerce exist as a computationally explorable, high-dimensional landscape.

The AI Inventor is an autonomous agent designed to strategically navigate this landscape. It conceptualizes invention as a journey, planting a flag at the coordinates of a Problem and charting a de-risked, evidence-grounded path toward an Ideal Solution.

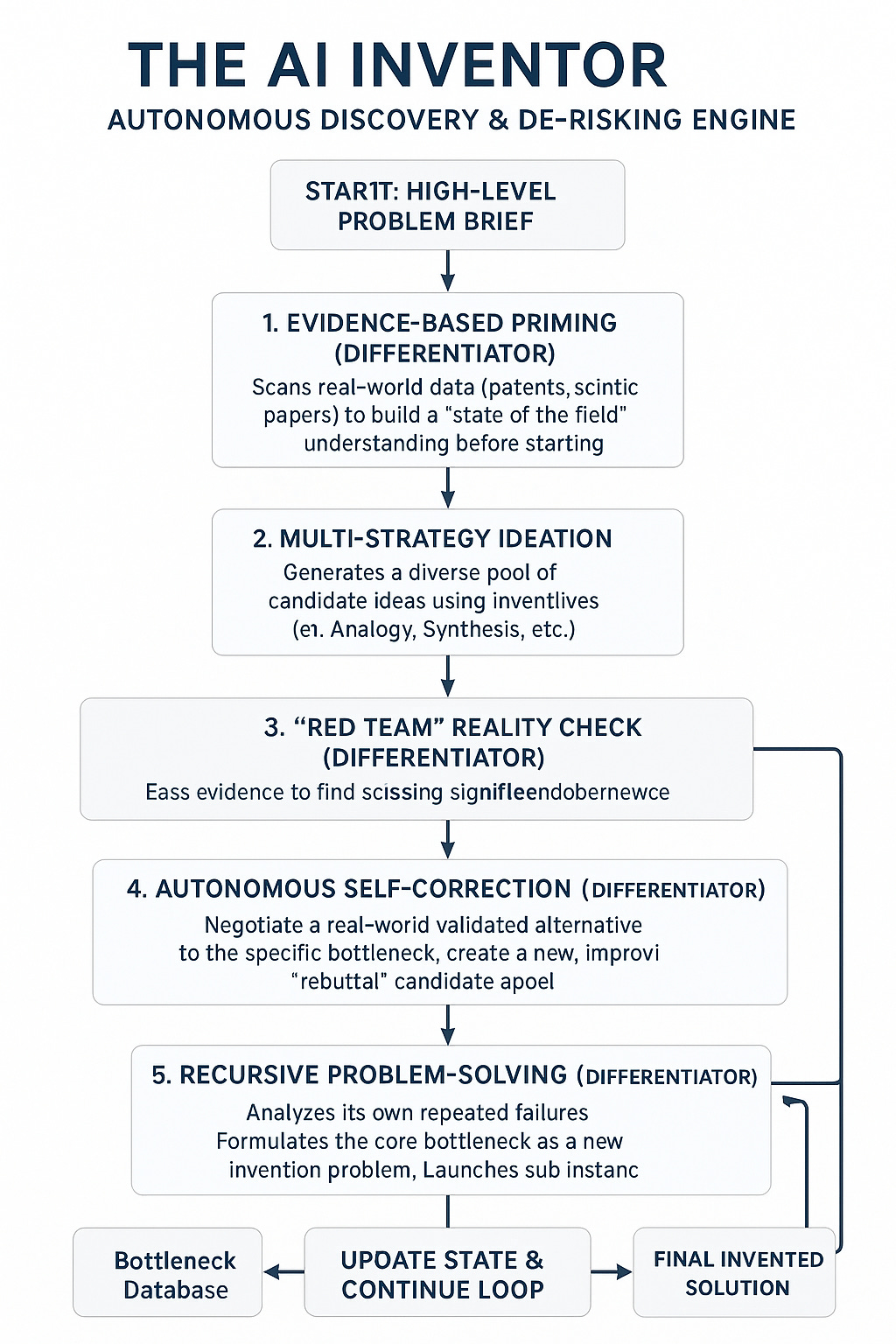

The Architecture: A Five-Stage Cognitive Loop

The core of the system is a structured, five-stage cognitive loop. It is not a simple linear process but a dynamic, iterative cycle in which the agent reasons, creates, critiques, and corrects itself.

Stage 1: Evidence-Based Priming. The journey doesn't start from a blank slate. The agent first builds a deep, evidence-based understanding of the "state of the field" by scanning patents and scientific literature relevant to the problem.

Stage 2: Multi-Strategy Ideation. The agent then deliberately explores the "conceptual dark space", the fertile gaps between established nodes of knowledge. Using a portfolio of cognitive tactics such as cross-domain analogy and serendipitous synthesis, it generates non-obvious candidate ideas.

Stage 3: "Red Team" Reality Check. This is the critical self-awareness step. Every candidate is immediately subjected to a rigorous, evidence-based reality check. The agent actively tries to disprove its own ideas, searching for the single biggest scientific or technological bottleneck that would prevent the idea from working in the real world.

Stage 4: Autonomous Self-Correction. When a bottleneck is found, the agent doesn't simply discard the idea. It attempts to "negotiate" a solution by searching for validated, real-world technologies that could replace the high-risk component, actively de-risking its own inventions.

Stage 5: Recursive Problem-Solving. This is the system's most distinctive capability. If the agent repeatedly fails because of the same fundamental roadblock, it recognizes the pattern. It pauses its main mission, formally defines the roadblock as a new, targeted invention problem, and launches a recursive instance of itself to solve it. In essence, it can stop and invent the tools it needs to continue its journey.
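To make the control flow of the five stages concrete, here is a minimal Python sketch of how such a loop might be wired together. It is an illustration under stated assumptions, not the AI Inventor's actual code: the names `Candidate`, `Stages`, `invent`, `stuck_after`, and `max_depth` are hypothetical, and the four stage functions are toy stubs standing in for the real literature search, ideation, critique, and negotiation steps.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Candidate:
    description: str


@dataclass
class Stages:
    """Hypothetical stage implementations, injected as plain callables."""
    prime: Callable[[str], List[str]]                           # stage 1: problem -> evidence
    ideate: Callable[[str, List[str]], List[Candidate]]         # stage 2: candidate ideas
    red_team: Callable[[Candidate, List[str]], Optional[str]]   # stage 3: bottleneck or None
    negotiate: Callable[[Candidate, str, List[str]], Optional[Candidate]]  # stage 4


def invent(problem: str, stages: Stages, max_rounds: int = 3,
           stuck_after: int = 2, depth: int = 0, max_depth: int = 2) -> List[Candidate]:
    evidence = stages.prime(problem)
    failures: Counter = Counter()          # how often each bottleneck blocked an idea
    survivors: List[Candidate] = []
    for _ in range(max_rounds):
        for cand in stages.ideate(problem, evidence):
            bottleneck = stages.red_team(cand, evidence)
            if bottleneck is None:
                survivors.append(cand)
                continue
            revised = stages.negotiate(cand, bottleneck, evidence)
            if revised is not None:
                survivors.append(revised)
                continue
            failures[bottleneck] += 1
            # Stage 5: the same roadblock keeps recurring, so treat it as
            # its own invention problem and solve it recursively.
            if failures[bottleneck] >= stuck_after and depth < max_depth:
                tools = invent(f"Solve: {bottleneck}", stages, max_rounds,
                               stuck_after, depth + 1, max_depth)
                evidence = evidence + [t.description for t in tools]
                failures[bottleneck] = 0
        if survivors:
            break
    return survivors


# Toy stubs (assumptions for illustration only).
def toy_prime(problem):
    return [f"prior art on {problem}"]

def toy_ideate(problem, evidence):
    return [Candidate(f"{problem} concept ({len(evidence)} evidence items)")]

def toy_red_team(cand, evidence):
    # Main-mission ideas stay blocked until a sub-mission adds new evidence.
    if cand.description.startswith("Solve:") or len(evidence) > 1:
        return None
    return "no durable coating"

def toy_negotiate(cand, bottleneck, evidence):
    return None  # pretend no validated substitute exists


stages = Stages(toy_prime, toy_ideate, toy_red_team, toy_negotiate)
result = invent("plaque therapy", stages)
print(result[0].description)
```

In this toy run the main mission fails twice on the same bottleneck, launches a sub-mission to address it, folds the result back into its evidence, and only then produces a surviving concept. The `max_depth` cap is one simple way to keep the recursion bounded; the original system may handle this differently.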

A Case Study Snapshot: Tackling Arterial Plaque

To make this tangible, here is a highly condensed snapshot from a recent run.

The Mission: Invent a biological agent to safely dissolve hardened arterial plaque.

An Early Candidate Idea: "An athero-regenerative therapy using engineered stem cells that secrete plaque-dissolving enzymes."

Stage 3: "Red Team" Reality Check Identifies a Bottleneck:

Bottleneck Identified: Viable Cell Retention on a Stent Platform Through Deployment.

Reason: The immense mechanical shear forces during stent crimping and expansion cause catastrophic death of seeded cells, rendering the core therapeutic mechanism ineffective.

CITATION: [Hoch et al., 2014, Biomaterials]

Stage 4: Autonomous Self-Correction Negotiates an Alternative:

Negotiation Success: The agent searched for and identified the closest validated technology to solve the cell-retention problem.

Alternative Found: CellSheath™ Technology (or similar microporous stent coating/cage systems).

Reasoning: This technology provides a physical barrier that protects cells from crushing and shear forces, with pre-clinical studies showing >70-80% viability retained post-deployment.

CITATION: [Stock, U. A., et al. (2006). The Journal of Thoracic and Cardiovascular Surgery]

The agent then automatically revised its original concept to incorporate this validated, real-world component, creating a new, more robust, and less risky idea to carry forward. This cycle of ideation, critique, and evidence-based correction is what makes the process so powerful.
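One way to picture the revision step is as a small record that carries its own critique and fix. The Python sketch below is a hedged illustration only: the `Finding` and `Concept` types and the `open_risk` property are hypothetical names, and the field values are copied from the snapshot above rather than from any actual system output.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Finding:
    title: str
    reasoning: str
    citation: str


@dataclass(frozen=True)
class Concept:
    summary: str
    bottleneck: Optional[Finding] = None    # set by the "Red Team" check
    alternative: Optional[Finding] = None   # set by self-correction

    @property
    def open_risk(self) -> bool:
        # A concept is risky while it has a bottleneck and no validated fix.
        return self.bottleneck is not None and self.alternative is None


candidate = Concept("Engineered stem cells that secrete plaque-dissolving enzymes")

flagged = replace(candidate, bottleneck=Finding(
    title="Viable cell retention on a stent platform through deployment",
    reasoning="Shear forces during stent crimping and expansion kill seeded cells",
    citation="Hoch et al., 2014, Biomaterials",
))

revised = replace(flagged, alternative=Finding(
    title="Microporous stent coating/cage system",
    reasoning="Physical barrier protects cells from crushing and shear forces",
    citation="Stock, U. A., et al. (2006). The Journal of Thoracic and Cardiovascular Surgery",
))

for concept in (candidate, flagged, revised):
    print(concept.open_risk)
```

Keeping the bottleneck attached to the revised concept, rather than erasing it, preserves the evidence trail from critique to correction.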

What Makes This Different

This is not just another application of generative AI. It is a system designed around three core principles:

True Autonomy: It is an agent, not a tool. It manages its own cognitive state, maintains momentum, and pursues a long-term goal with persistence.

Metacognitive Capability: It knows when it is stuck. Its ability to analyze its own repeated failures and launch recursive sub-missions to solve core bottlenecks is a fundamental step toward more general AI problem-solving.

Grounded in Evidence: The architecture is inherently self-critical. By forcing every novel idea through a "Red Team" filter and tethering it to the bedrock of existing scientific and patent literature, the system is designed to produce credible, defensible, and ultimately valuable outputs.

The Road Ahead

The system's core engine is domain-agnostic. While the example shown is biomedical, its logic can be applied to challenges in materials science, climate technology, financial modeling, or any field where innovation is driven by the synthesis of complex information.

The road ahead involves scaling this process: running more invention tests in parallel, on more powerful machinery and across more domains, to further refine the strategic wisdom of the core Orchestrator. The ultimate vision is a powerful engine that can act as a force multiplier for human ingenuity, de-risking the fuzzy front end of innovation and helping us solve hard problems faster.

I believe this approach represents a meaningful step in the evolution of AI. If this work resonates with you, I would be keen to connect with fellow researchers, investors, and pioneers who are passionate about building the future of discovery.